Sales Coach

Introduction

Client Background

This project was designed to automate sales agent performance coaching using AI. The original system was a functional proof of concept that could pull call recordings, transcribe them, and attempt basic coaching analysis. However, as real users began relying on it, several key issues emerged:

- Data wasn’t reliably stored.

- AI responses were unstable.

- Reports weren’t being delivered.

The goal of this engagement was to fix, harden, and scale the system to ensure that it could support daily operations and eventually evolve into a fully autonomous AI coaching agent.

Challenge

The core challenges centered on data stability, AI reliability, and communication workflows:

- Scorecard data not being saved correctly to Supabase, breaking all downstream automations.

- OpenAI Assistant timeouts and unpredictable outputs.

- Incomplete integration with weekly/monthly coaching email delivery.

System Design

The first core module handled the ingestion and processing of sales calls. This started with retrieving the agent and call metadata from Supabase and PhoneBurner. After the calls had been located, recordings were passed through OpenAI’s Whisper for transcription.

The resulting transcript was then fed into GPT-4 using prompt templates tailored to the coaching criteria: objection handling, rapport building, and closing technique. The prompt was structured to request both qualitative and quantitative feedback, which allowed the LLM to return a complete scorecard.
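As a rough illustration of what such a template could look like, the sketch below fills a fixed prompt with the three coaching criteria and a transcript. The wording of the prompt, the 1 to 5 scale, and the names `PROMPT_TEMPLATE` and `build_coaching_prompt` are assumptions made for this example, not the project's actual prompt.

```python
from string import Template

# The three criteria come from the write-up; the scale and wording are assumed.
CRITERIA = ("objection handling", "rapport building", "closing technique")

PROMPT_TEMPLATE = Template(
    "You are a sales coach. Review the call transcript below.\n"
    "For each criterion, give a score from 1 to 5 and one or two sentences "
    "of feedback.\n"
    "Criteria: $criteria\n\n"
    "Transcript:\n$transcript\n"
)


def build_coaching_prompt(transcript: str, criteria=CRITERIA) -> str:
    """Fill the template with the criteria list and the call transcript."""
    return PROMPT_TEMPLATE.substitute(
        criteria=", ".join(criteria),
        transcript=transcript.strip(),
    )


if __name__ == "__main__":
    print(build_coaching_prompt("Agent: Hi, thanks for taking my call."))
```

Keeping the criteria in one tuple means the quantitative and qualitative parts of the scorecard are always requested for the same set of skills.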

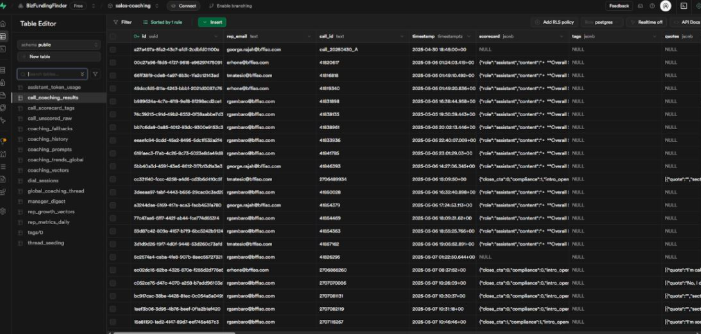

With accurate coaching data in hand, the next part of the system design was organizing and delivering it. For this, data was saved in Supabase using structured JSON formats that matched existing dashboard schemas. Coaching summaries were indexed with relevant metadata that included the call ID, agent, and tags. These summaries were optionally embedded as vectors for future semantic search and memory.
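A minimal sketch of what one stored record might look like is shown below. The column names (`call_id`, `agent_id`, `scores`, `summary`, `tags`, `created_at`) and the helper `build_scorecard_row` are hypothetical; the real dashboard schema is not described in this write-up.

```python
import json
from datetime import datetime, timezone


def build_scorecard_row(call_id, agent_id, scores, summary, tags):
    """Return a JSON-serializable row; the column names are hypothetical."""
    return {
        "call_id": call_id,
        "agent_id": agent_id,
        "scores": scores,              # e.g. {"rapport building": 4}
        "summary": summary,            # qualitative coaching summary
        "tags": sorted(set(tags)),     # de-duplicated for stable indexing
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    row = build_scorecard_row(
        call_id="call-001",
        agent_id="agent-042",
        scores={"objection handling": 3, "rapport building": 4},
        summary="Strong opening; address pricing objections earlier.",
        tags=["pricing", "follow-up", "pricing"],
    )
    print(json.dumps(row, indent=2))
```

With the `supabase` Python client, a row like this could then be written with something along the lines of `client.table("scorecards").insert(row).execute()`, where the table name `scorecards` is again an assumption.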

For delivery, dynamic email content was created for weekly and monthly digests. These messages were customized based on the agent’s role, recent performance, and progress against coaching themes. Digest generation was built from modular steps so that email payloads could be reused for other delivery channels, such as Slack or SMS, in the future.
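One way to get that reuse is to build a channel-neutral payload first and render it per channel afterwards. The sketch below assumes a hypothetical `DigestPayload` shape and two illustrative renderers; the field names and message wording are not taken from the project.

```python
from dataclasses import dataclass, field


@dataclass
class DigestPayload:
    """Channel-neutral digest data (field names are hypothetical)."""
    agent_name: str
    role: str
    period: str            # "weekly" or "monthly"
    average_score: float
    themes: list = field(default_factory=list)


def render_email(p: DigestPayload) -> dict:
    """Render the payload as an email subject and plain-text body."""
    lines = [
        f"Hi {p.agent_name},",
        f"Your {p.period} average score was {p.average_score:.1f}.",
    ]
    lines += [f"- {theme}" for theme in p.themes]
    return {"subject": f"Your {p.period} coaching digest", "body": "\n".join(lines)}


def render_slack(p: DigestPayload) -> dict:
    """Render the same payload as a single short chat message."""
    themes = ", ".join(p.themes) or "none"
    return {
        "text": f"{p.agent_name} ({p.role}) {p.period} digest: "
                f"avg {p.average_score:.1f}; themes: {themes}"
    }


if __name__ == "__main__":
    payload = DigestPayload("Ana", "closer", "weekly", 4.0, ["objection handling"])
    print(render_email(payload)["body"])
    print(render_slack(payload)["text"])
```

Adding a new channel then only requires a new renderer; the step that computes the payload stays untouched.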

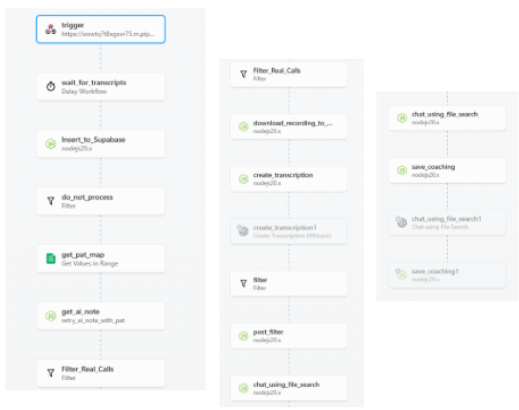

A major part of the project involved overhauling the existing Pipedream workflows. Instead of sprawling flows with minimal observability, the system was broken into clean, testable stages that included:

- Data fetching.

- Transcription.

- Scoring.

- Storage.

- Delivery.

Each module had retry logic and output logging for easier debugging. Token usage was optimized by reducing unnecessary prompt steps and stripping redundant metadata before calling the LLM. In addition, reusable code blocks and custom functions were added to standardize how transcripts were formatted, assistant calls were structured, and results were validated before proceeding.
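The per-module retry and logging behavior can be sketched as a small wrapper around each stage. The function name `run_stage`, the retry count, and the linear backoff are assumptions for illustration; the write-up does not state the actual retry policy.

```python
import logging
import time

logger = logging.getLogger("sales_coach")


def run_stage(name, func, payload, retries=3, delay_seconds=1.0, sleep=time.sleep):
    """Run one pipeline stage, logging each attempt and retrying on failure."""
    for attempt in range(1, retries + 1):
        try:
            result = func(payload)
            logger.info("stage=%s attempt=%d ok", name, attempt)
            return result
        except Exception as exc:
            logger.warning("stage=%s attempt=%d failed: %s", name, attempt, exc)
            if attempt == retries:
                raise  # out of retries: surface the error to the caller
            sleep(delay_seconds * attempt)  # assumed linear backoff


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    # A trivial stand-in stage that upper-cases its input.
    print(run_stage("transcription", str.upper, "hello"))
```

Because every stage goes through the same wrapper, the logs share one format, which makes it easier to see which stage failed and on which attempt.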

OpenAI’s Assistants feature was central to the entire system, but it had edge cases that needed careful handling. Timeouts were common when prompts exceeded length limits or the assistant attempted overly long completions. These were handled with fallback logic that worked as follows:

- If a response exceeded a set duration, the system would re-prompt with a simplified version or retry with trimmed inputs.
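The fallback rule above can be sketched as follows. Here `call_model` is a hypothetical callable that wraps the assistant call and raises `TimeoutError` when the set duration is exceeded; keeping the tail of the transcript when trimming is also an assumption, since the write-up does not say which part was kept.

```python
def score_with_fallback(call_model, transcript, timeout_seconds=60.0, trimmed_chars=4000):
    """Try the full transcript first; on timeout, retry once with trimmed input."""
    try:
        return call_model(transcript, timeout_seconds)
    except TimeoutError:
        # Assumed trimming strategy: keep only the last part of the call.
        trimmed = transcript[-trimmed_chars:]
        return call_model(trimmed, timeout_seconds)


if __name__ == "__main__":
    def fake_model(text, timeout):
        # Stand-in for the real assistant call: "times out" on long input.
        if len(text) > 100:
            raise TimeoutError("assistant took too long")
        return f"scored {len(text)} characters"

    print(score_with_fallback(fake_model, "x" * 500, trimmed_chars=100))
```

If the trimmed retry also times out, the exception propagates, so an outer retry or alerting step can decide what to do next.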

Structured parsing checks were introduced so that responses that did not meet the criteria could not crash the workflow or corrupt downstream data. In addition, we redesigned the coaching prompts to be more deterministic by guiding the LLM to return labeled sections with consistent headings, which made post-processing far more reliable.
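A parsing check of this kind might look like the sketch below. It assumes a specific response layout (a `## ` heading per criterion followed by `Score:` and `Feedback:` lines) that is invented for this example; the project's actual headings are not given.

```python
import re

# Assumed layout per section:
#   ## <Section name>
#   Score: <1-5>
#   Feedback: <one line>
REQUIRED_SECTIONS = ("Objection Handling", "Rapport Building", "Closing Technique")
SECTION_PATTERN = re.compile(
    r"^## (?P<name>[^\n]+)\nScore: (?P<score>[1-5])\nFeedback: (?P<feedback>[^\n]+)$",
    re.MULTILINE,
)


def parse_scorecard(text):
    """Return {section: {"score", "feedback"}} or raise ValueError if malformed."""
    found = {
        m["name"].strip(): {"score": int(m["score"]), "feedback": m["feedback"].strip()}
        for m in SECTION_PATTERN.finditer(text)
    }
    missing = [s for s in REQUIRED_SECTIONS if s not in found]
    if missing:
        raise ValueError(f"missing or malformed sections: {missing}")
    return found


if __name__ == "__main__":
    sample = (
        "## Objection Handling\nScore: 4\nFeedback: Handled pricing well.\n\n"
        "## Rapport Building\nScore: 5\nFeedback: Warm and personable.\n\n"
        "## Closing Technique\nScore: 2\nFeedback: Ask for the sale directly.\n"
    )
    print(parse_scorecard(sample))
```

Raising an explicit error on a malformed response lets the workflow retry or skip the call instead of writing a partial scorecard to storage.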

Testing was essential to building trust in the coaching outputs. Transcripts were run through the entire pipeline to verify the accuracy and structure of the coaching feedback. Supabase storage was validated with mock and real call data to confirm indexing, retrieval, and data visibility on dashboards.

Email digests were test-sent with multiple user roles to check message content and dynamic rendering. Every step was logged with timestamps and error tracking to ensure a tight feedback loop between test runs and flow tweaks. This setup made it easy to onboard new sales workflows, like the BFF enrollment reps, with minimal risk.

Development Process

We approached the rebuild step by step, making sure each part of the system could stand on its own before putting everything together. First, we broke the sales coaching workflow into smaller modules, like transcription, scoring, storage, and delivery. By developing and testing each module separately, we could spot problems early and fix them without risking the rest of the system.

We also set clear rules for how data moved in and out of each module so that the flow stayed consistent. Using GitHub for version control kept our work organized, and short development cycles helped us make quick improvements based on what we saw in testing. After the core modules were working smoothly, we focused on making the system faster, more reliable, and more cost-efficient.

We added retry logic to handle API timeouts, refined prompts to cut token use, and put strict checks in place to stop bad AI responses from causing errors later in the workflow. For Supabase, we used schema validation and automated indexing to make sure data showed up correctly in dashboards. Throughout development, we tested with real sales calls, checked every output, and ran regression tests after each change. This steady, hands-on process helped us move from a fragile MVP to a tool that’s ready for daily use.
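To make the schema validation step concrete, here is a minimal sketch of a check that could run before a row is written to storage. The field list in `EXPECTED_FIELDS` and the function `validate_row` are hypothetical and stand in for whatever schema the dashboards actually expect.

```python
# Hypothetical schema: field name -> required Python type.
EXPECTED_FIELDS = {
    "call_id": str,
    "agent_id": str,
    "scores": dict,
    "summary": str,
    "tags": list,
    "created_at": str,
}


def validate_row(row, expected=EXPECTED_FIELDS):
    """Return a list of problems; an empty list means the row is safe to store."""
    problems = [f"missing field: {k}" for k in expected if k not in row]
    problems += [
        f"wrong type for {k}: expected {t.__name__}, got {type(row[k]).__name__}"
        for k, t in expected.items()
        if k in row and not isinstance(row[k], t)
    ]
    problems += [f"unexpected field: {k}" for k in row if k not in expected]
    return problems


if __name__ == "__main__":
    bad_row = {"call_id": 123, "agent_id": "agent-042", "scores": {}, "summary": "ok"}
    for problem in validate_row(bad_row):
        print(problem)
```

Returning every problem at once, rather than stopping at the first, tends to make regression runs quicker to diagnose.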

Launch And Results

After the cleanup and rebuild, we confirmed that the system was production-ready and fully operational. The launch produced the following results:

- Successful write-through from PhoneBurner to Whisper to GPT to Supabase.

- Automated coaching digests were sent weekly/monthly with high personalization accuracy.

- System now ready for onboarding of BFF enrollment reps via GoHighLevel.

- New GoHighLevel integration workflow added for BFF enrollment reps.

- Clean and modular workflow stages created in Pipedream.